SE Labs awards Coro's Email and Cloud Security a AAA Rating.

Endpoint Protection

Log all endpoint activity, analyze data anomalies, and automate resolution of security events

Email Protection

Scan emails for threats and remediate them automatically, drastically cutting management time

Network Protection

Protect networks and data with Zero Trust Network Access (ZTNA), VPN, and enterprise- and military-grade encryption

Cloud App Security

Protect users, cloud drives, and apps with advanced threat detection and robust remediation

Data Protection

Protect user and endpoint data from leaks, misuse, and unauthorized access across devices and cloud apps

Security Awareness Training

Train users to identify phishing and social engineering threats through real-world simulations

Coro Compass

Learn about our partner program, which invests in your business, not ours.

MSPs

Profitable, scalable cybersecurity for managed service providers.

Resellers

Drive revenue with in-demand security solutions.

Distributors

Simplified security offerings for tech service distributors & agents.

TSDs & Agents

Simplified security offerings for tech service distributors & agents.

Software Vendors

Empower your software with advanced cybersecurity.

Telecommunications & Media

Secure telecom services with robust solutions.

Become a Partner

Boost your business: Transform cybersecurity into revenue.

Partner Portal

Existing partners can manage their pipeline, register deals, and access essential sales and marketing resources.

Automotive

Achieve compliance and guard against threats.

Education

Keep educational institutions safe.

Finance

Protect data, transactions, and operations.

Government

Guard against threats to local and national agencies.

Healthcare

Meet regulatory requirements and protect privacy.

IT Service Providers

Optimize resources and secure organizations.

Manufacturing

Reduce risk and keep operations uninterrupted.

Software & Technology

Focus on innovation and not cyber threats.

Trucking

Secure transportation for the road ahead.

Compliance

Learn how our cybersecurity solutions seamlessly align with and simplify adherence to the regulations relevant to your business.

Compliance Survey

Evaluate your compliance: Discover regulatory impacts on your business.

Glossary

Navigate our Glossary for clear definitions and detailed explanations of key cybersecurity concepts and terminology.

Interactive Demo

Step inside our interactive demo and explore Coro's powerful cybersecurity platform.

About us

Learn more about Coro and the people behind it.

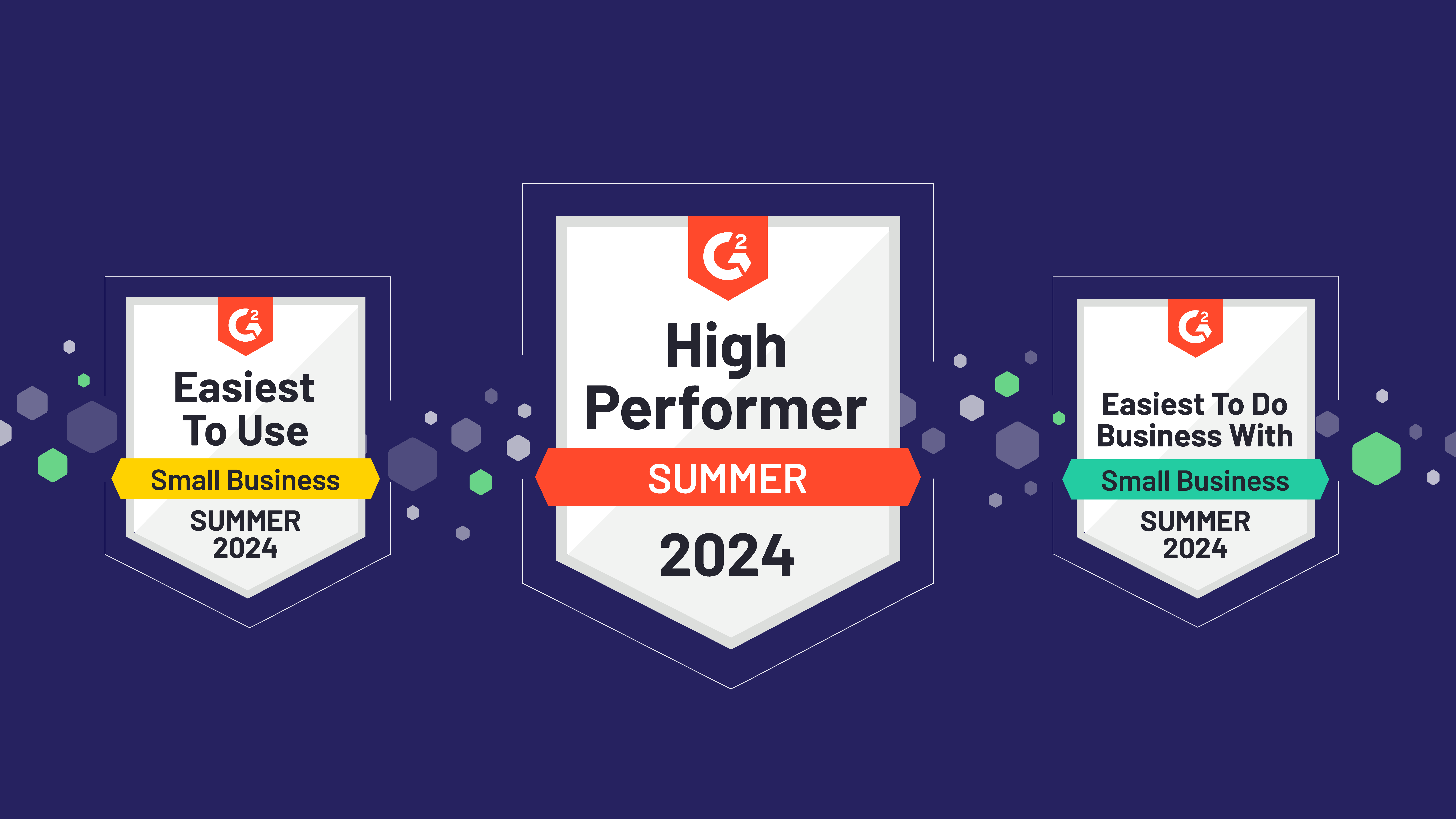

G2 Awards

Discover Coro’s G2 awards and recognitions.

Careers

Join the most innovative organization in cybersecurity.

Press

Catch up on the latest Coro news and updates.

Technical Documentation

Find solutions quickly: Explore our help and documentation.

Contact Support

Minimize risk, ensure continuous operations: Contact Coro Support today.

Contact SOC

Need immediate security assistance? Contact our SOC team.